November 11, 2025

Introduction

On the Scientific Computing (SciC) Linux Clusters, it is important to choose the correct queue so that your job is scheduled as quickly as possible and has access to the resources your application needs. This page lists the most common ERISTwo queues, which apply equally to general use and to research groups with access to dedicated nodes.

Working with Queues

Set the queue to use with the "-q" option to bsub:

bsub -q normal < my_script.lsf

Or by putting the same option in the header of your script:

#BSUB -q normal

Specifying resource requirements

This example requests 4 CPU cores and 10GB of memory (specified in MB):

bsub -q normal -n 4 -R 'rusage[mem=10000]' < my_script.lsf

The same options given in an LSF script:

#BSUB -q normal
#BSUB -n 4
#BSUB -R rusage[mem=10000]

Another example shows both the memory requirement and the memory limit settings, which are both needed for reservations of more than 40GB. Here 64GB is reserved:

bsub -q bigmem -M 64000 -R 'rusage[mem=64000]' < my_script.lsf

The same options given in an LSF script:

#BSUB -q bigmem
#BSUB -M 64000
#BSUB -R rusage[mem=64000]

Queue script examples

Several script templates are placed in each home folder when the account is created. To list them:

ls ~/lsf/templates/bsub

To test a template, copy each example to a different folder, for example ~/lsf, and then submit the job as described in the example. Read each example for more detailed information.

If you have deleted your ~/lsf folder, you can copy it from /lsf/copy/templates.
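The templates themselves are not reproduced here. As a rough sketch of how the header options shown earlier fit into a single file, a job script might look like the following. The job name, the output file name and the workload are hypothetical placeholders; `-J` and `-o` are standard bsub options, and `%J` in the output file name expands to the job ID.

```shell
#!/bin/bash
# Sketch of an LSF job script. The shell treats the #BSUB lines as comments;
# bsub reads them as submission options.

# Queue, CPU cores and memory (in MB), as in the earlier example.
#BSUB -q normal
#BSUB -n 4
#BSUB -R rusage[mem=10000]

# Hypothetical job name and output file (%J is replaced by the job ID).
#BSUB -J example_job
#BSUB -o example_job.%J.out

# Placeholder workload: replace with the real application command.
started_on=$(uname -n)
echo "Job started on ${started_on}"
```

Saved as my_script.lsf, such a script would be submitted with `bsub < my_script.lsf`, with no further options needed on the command line.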

Standard Queues on ERISTwo

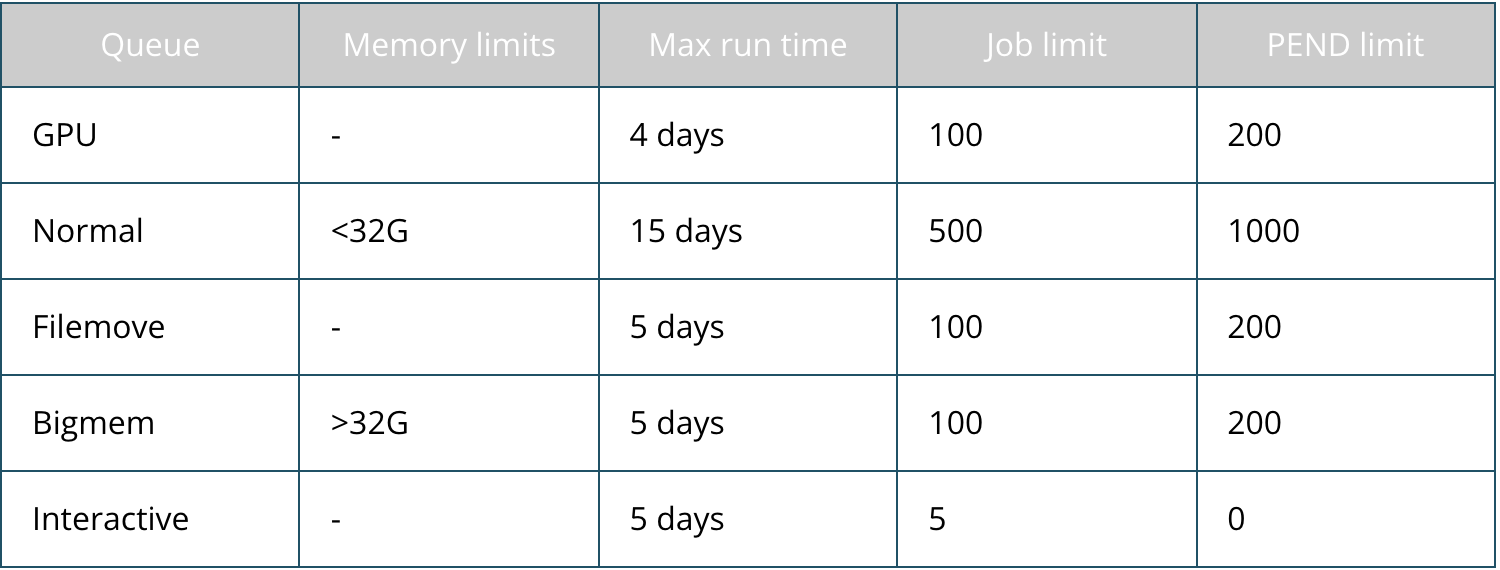

The job scheduler offers several job queues to which you can submit your jobs. Each queue is optimized for different types of job, based on:

- run time

- memory requirement

- number of CPUs used in parallel

Summary

gpu

The "gpu" queue has limited machines available. Users must request/renew access at @email.

- Maximum runtime is 4 days.

normal

The "normal" queue is fit for most cluster jobs.

- Maximum runtime is 15 days.

- Memory requirement should not exceed 32GB.

filemove

The "filemove" queue is for transferring files between ERISTwo and an external mount.

- Maximum runtime is 5 days.

bigmem

The "bigmem" queue is suitable for jobs that require more than 32GB of memory.

- Maximum runtime is 5 days.

- The memory limit (-M) must also be set equal to the memory reservation when more than 40GB is reserved.
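The memory and runtime rules in this summary can be sketched as two small shell helpers. This is only an illustration, not a site-provided tool: the function names `queue_max_days` and `suggest_bsub` are hypothetical, the thresholds assume the same decimal convention as the examples above (32GB = 32000 MB, 40GB = 40000 MB), and the "gpu" and "filemove" queues are not chosen automatically because they depend on the kind of work rather than on memory. The helper only prints a suggested command; it does not submit anything.

```shell
#!/bin/bash

# Print the maximum runtime in days for a queue, per the summary above.
queue_max_days() {
    case "$1" in
        gpu)             echo 4 ;;
        normal)          echo 15 ;;
        filemove|bigmem) echo 5 ;;
        *) echo "unknown queue: $1" >&2; return 1 ;;
    esac
}

# Print a suggested bsub command line for a memory request given in MB.
suggest_bsub() {
    local mem_mb=$1 script=$2
    local opts
    if [ "$mem_mb" -le 32000 ]; then
        # Up to 32GB: the normal queue is sufficient.
        opts="-q normal"
    elif [ "$mem_mb" -le 40000 ]; then
        # More than 32GB, up to 40GB: bigmem, reservation only.
        opts="-q bigmem"
    else
        # More than 40GB: the memory limit must equal the reservation.
        opts="-q bigmem -M ${mem_mb}"
    fi
    printf "bsub %s -R 'rusage[mem=%s]' < %s\n" "$opts" "$mem_mb" "$script"
}

suggest_bsub 10000 my_script.lsf
suggest_bsub 64000 my_script.lsf
queue_max_days bigmem
```

For the 64GB case the printed suggestion matches the bigmem command shown earlier in this document, including the matching `-M` and `rusage` values.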

Additional Queues

Queue for an interactive command line session

To open a login session on a compute node use the following:

bsub -Is -q interactive /bin/bash

Remember to request more than one job slot and additional memory if multi-threaded or large-memory applications are to be run in the session (e.g. 64GB and 4 concurrent CPUs):

bsub -Is -n 4 -R 'rusage[mem=64000]' /bin/bash

Priority node allocation

Labs that have priority nodes through the "Adopt-a-node" program get priority access to those nodes by submitting their jobs to the corresponding group queue.

A single queue is set up per group by default. Additional queues for a group can be requested from the administrators.

Other requirements

Please contact Scientific Computing if none of the above queues fits your requirements.